It is no wonder that automation testing has taken a front seat. The time we invest in automated tests (ONCE) pays off in numerous ways. I personally love automated testing because it tells us quickly when something is broken (Fail Fast), keeps the dev team marching forward, and makes the team fearless during refactoring.

Anyone involved in automation testing is probably aware of the pyramid below (Without Mummies), which shows the layers of testing and how, as we climb the layers, our tests get slower to execute and more expensive.

Over the last couple of years I had the privilege of working with multiple teams. Almost all of them knew the testing pyramid and spent a good amount of time on automation testing. Yet in code reviews we also saw how much effort the teams put into correcting earlier test cases. Digging further, we found a few things that were not done quite right:

- Tests that belonged in one layer were found in a different layer

- Not all code was covered in the core modules (How to find core modules?)

- Redundant tests across layers

- Test classes were not refactored (Will cover more in another article)

“Automation testing is a lifesaver ONLY IF IT'S DONE RIGHT. If done wrong, it introduces maintainability issues and false positives, and it consumes time in correcting tests, which hampers the overall productivity of the team.”

Why multiple layers?

In enterprise software, not all test cases can be covered in one layer; that is the only reason we need the next layers. So if we can test something in a lower layer, let's not test it again in the layers above.

In automation testing the focus should be on coverage. If everything can be covered in one or more layers, that is sufficient. For example, for a small product bundled as a single exe, we can use only the functional testing layer and ignore all the others. The number of layers changes with the requirements; the intent remains the same.

“Starting from the bottom layer, find what could not be tested in that layer, then introduce a layer above it and write tests for the missing cases.”

Best Practices

Let us look at a few best practices for each layer that keep things clean.

Unit Testing Layer: The super fast and cheap

- Name test cases consistently, so that it is clear what broke when a test fails. I recommend <MethodName>_<Scenario>_<ExpectedResult>

- Use the Arrange-Act-Assert pattern in the test body.

- Try to get to 100% coverage (or at least close to it) with unit tests. In fact, use TDD to cover everything.

- Write no test cases for private functions. Private functions called from public functions should be tested only through those public functions.

- Have a way to check coverage while developing (for example, a simple command line that generates coverage results and shows the uncovered lines).

- The body of each test case should be small. If test cases are getting bigger, refactor the class.

- The test class should change only when the class under test changes. So, mock everything outside the class.

- Create wrappers and interfaces for OS-level calls such as DateTime and FileSystem, so that edge cases can be tested around them.

- Create wrappers and interfaces that turn asynchronous operations into synchronous ones, so tests do not have to wait (Thread.sleep) before asserting.
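To make a few of these points concrete, here is a minimal sketch in Python using the standard `unittest` and `unittest.mock` modules. `Clock`, `InvoiceService`, and the repository's `get_due_date` method are hypothetical names invented for illustration; the sketch only assumes that the class under test receives its clock and repository through the constructor. The test names follow the method/scenario/expected-result convention, adapted to Python's `test_` prefix.

```python
import unittest
from datetime import datetime
from unittest.mock import Mock


class Clock:
    """Thin wrapper around the system clock so tests can replace it."""

    def now(self) -> datetime:
        return datetime.now()


class InvoiceService:
    """Hypothetical class under test; collaborators are injected."""

    def __init__(self, clock, repository):
        self._clock = clock
        self._repository = repository

    def is_overdue(self, invoice_id: str) -> bool:
        due_date = self._repository.get_due_date(invoice_id)
        return self._clock.now() > due_date


class InvoiceServiceTests(unittest.TestCase):
    def _build_service(self, now, due_date):
        # Everything outside the class is mocked.
        clock = Mock(spec=Clock)
        clock.now.return_value = now
        repository = Mock()
        repository.get_due_date.return_value = due_date
        return InvoiceService(clock, repository), repository

    def test_is_overdue__due_date_passed_at_year_boundary__returns_true(self):
        # Arrange: "now" is one second into the new year
        service, repository = self._build_service(
            now=datetime(2024, 1, 1, 0, 0, 1),
            due_date=datetime(2023, 12, 31, 23, 59, 59),
        )
        # Act
        result = service.is_overdue("INV-1")
        # Assert: the return value and the call going outside the class
        self.assertTrue(result)
        repository.get_due_date.assert_called_once_with("INV-1")

    def test_is_overdue__due_date_in_future__returns_false(self):
        # Arrange
        service, _ = self._build_service(
            now=datetime(2024, 1, 1, 0, 0, 1),
            due_date=datetime(2024, 1, 2),
        )
        # Act
        result = service.is_overdue("INV-1")
        # Assert
        self.assertFalse(result)
```

Saved in a test module, this can be run with `python -m unittest`. Because the clock is injected, the year-boundary case runs instantly and gives the same result on any day.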

Functional Testing Layer: The Requirements Verifier

- When stories are groomed with the team, the POs define the functional requirements and the architects define the technical requirements. Both should be converted to Given-When-Then syntax; these become the test cases in this layer.

- POs should be able to read this layer, understand what is being done, and help the team refine the test cases. Hence, use the ubiquitous language.

- The test class should change only when the component under test changes. So, mock everything outside the component.

- Test negative flows only at the boundaries, using mocks (e.g. the infrastructure is down when the web API gets a request), since negative flows within the component are taken care of in unit testing.

- When testing web APIs, also assert the response structure, so that a failing test signals that the team has introduced a breaking change and needs an API version update.

- Reuse the wrappers created in the Unit Testing Layer.
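A functional test along these lines might look like the sketch below. `OrderApi`, its `post_order` method, the repository, the event publisher, and the response shape are all hypothetical stand-ins for a real component and its infrastructure; the point is only the Given-When-Then shape, the mocked boundary, and the assertion on response structure.

```python
import unittest
from unittest.mock import Mock


class OrderApi:
    """Hypothetical component entry point; infrastructure is injected."""

    def __init__(self, repository, event_publisher):
        self._repository = repository
        self._event_publisher = event_publisher

    def post_order(self, payload: dict) -> dict:
        try:
            order_id = self._repository.save(payload)
        except ConnectionError:
            # Boundary failure: the database is unreachable.
            return {"status": 503, "body": {"error": "storage unavailable"}}
        self._event_publisher.publish("OrderCreated", {"orderId": order_id})
        return {"status": 201, "body": {"orderId": order_id, "item": payload["item"]}}


class PlaceOrderScenarios(unittest.TestCase):
    def setUp(self):
        # Given (shared): everything outside the component is mocked
        self.repository = Mock()
        self.event_publisher = Mock()
        self.api = OrderApi(self.repository, self.event_publisher)

    def test_given_valid_order_when_posted_then_stored_and_event_published(self):
        # Given
        self.repository.save.return_value = "order-42"
        # When
        response = self.api.post_order({"item": "book"})
        # Then: status, response structure, and outgoing calls
        self.assertEqual(response["status"], 201)
        self.assertEqual(set(response["body"]), {"orderId", "item"})
        self.repository.save.assert_called_once_with({"item": "book"})
        self.event_publisher.publish.assert_called_once_with(
            "OrderCreated", {"orderId": "order-42"}
        )

    def test_given_database_down_when_order_posted_then_503_and_no_event(self):
        # Given: the infrastructure at the boundary is down
        self.repository.save.side_effect = ConnectionError("db down")
        # When
        response = self.api.post_order({"item": "book"})
        # Then
        self.assertEqual(response["status"], 503)
        self.event_publisher.publish.assert_not_called()
```

The check on `set(response["body"])` is what flags a breaking change: if someone renames or drops a field, this test fails before any consumer does.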

Integration Testing Layer: The Infrastructure Verifier

- Primary test cases should answer: “When real infrastructure such as containers, DB, message bus, and auth is provided, does the component use that infrastructure and perform its job as expected?”

- Example 1: For a web API, passing a wrong auth token should give 401 and a correct one should give 200, which confirms that the auth infrastructure is used correctly.

- Example 2: In a web API, call POST and then assert on the GET results to check that the data is stored in the database correctly.
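As a rough sketch of Example 2, the test below writes through a component and reads the data back from a real database, with nothing mocked. To keep it self-contained, it assumes SQLite (via Python's standard `sqlite3` module) in place of whatever database the component really uses, and a hypothetical `OrderStore` class in place of the web API's POST and GET endpoints. The auth case from Example 1 is not covered here.

```python
import os
import sqlite3
import tempfile
import unittest
from contextlib import closing


class OrderStore:
    """Hypothetical component that persists orders in a real SQLite file."""

    def __init__(self, db_path: str):
        self._db_path = db_path
        with self._connect() as conn, conn:
            conn.execute(
                "CREATE TABLE IF NOT EXISTS orders "
                "(id INTEGER PRIMARY KEY, item TEXT NOT NULL)"
            )

    def _connect(self):
        # closing() makes sure the connection is closed after each call
        return closing(sqlite3.connect(self._db_path))

    def post(self, item: str) -> int:
        # The inner "conn" context commits on success, rolls back on error
        with self._connect() as conn, conn:
            cursor = conn.execute("INSERT INTO orders (item) VALUES (?)", (item,))
            return cursor.lastrowid

    def get(self, order_id: int):
        with self._connect() as conn:
            row = conn.execute(
                "SELECT item FROM orders WHERE id = ?", (order_id,)
            ).fetchone()
        return row[0] if row else None


class OrderStoreIntegrationTests(unittest.TestCase):
    def setUp(self):
        # Given: a real database file, removed again after each test
        tmp = tempfile.TemporaryDirectory()
        self.addCleanup(tmp.cleanup)
        self.db_path = os.path.join(tmp.name, "orders.db")

    def test_post_then_get_returns_stored_item(self):
        store = OrderStore(self.db_path)
        # When
        order_id = store.post("book")
        # Then: a fresh instance reads it back, so it was really persisted
        self.assertEqual(OrderStore(self.db_path).get(order_id), "book")

    def test_get_unknown_id_returns_none(self):
        store = OrderStore(self.db_path)
        self.assertIsNone(store.get(999))
```

Reading back through a second `OrderStore` instance is deliberate: it shows the data reached the database rather than staying in the first object's memory.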

System E2E Layer: The End User

- Primary test cases should be built around the end user's scenarios.

- If the end user will use a UI, have UI tests.

- If the end user will use an API, have API tests.

- For enterprise software, the tests should onboard customers, run the end-user scenarios, and then delete the onboarded customers. This also suggests exposing the onboarding/deboarding process through APIs.
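The onboard, run, deboard cycle can be sketched as a context manager, so that cleanup happens even when a scenario fails. Everything here is an assumption for illustration: `FakePlatformClient` is an in-memory stand-in for a client of a deployed system's onboarding API, and `onboard`, `deboard`, and `place_order` are made-up method names. In a real E2E suite the client would call the running services instead.

```python
from contextlib import contextmanager


class FakePlatformClient:
    """In-memory stand-in for a hypothetical API client of the deployed system."""

    def __init__(self):
        self.customers = {}

    def onboard(self, name: str) -> str:
        customer_id = f"cust-{len(self.customers) + 1}"
        self.customers[customer_id] = {"name": name, "orders": []}
        return customer_id

    def deboard(self, customer_id: str) -> None:
        self.customers.pop(customer_id, None)

    def place_order(self, customer_id: str, item: str) -> list:
        orders = self.customers[customer_id]["orders"]
        orders.append(item)
        return list(orders)


@contextmanager
def onboarded_customer(client, name: str):
    # Given: onboard a customer through the API
    customer_id = client.onboard(name)
    try:
        yield customer_id
    finally:
        # Deboard even if the scenario raised, so no test data is left behind
        client.deboard(customer_id)


def run_order_scenario(client) -> None:
    with onboarded_customer(client, "e2e-test-customer") as customer_id:
        # When: the end user's scenario is triggered
        orders = client.place_order(customer_id, "book")
        # Then: assert the end user's expectation
        assert orders == ["book"]
```

Calling `run_order_scenario(FakePlatformClient())` runs the whole cycle; afterwards the client holds no customers, whether the scenario passed or failed.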

Cheat Sheet

| Test Layer | Branch | Intent of Testing | Testing Scope | What to Mock? | Testing syntax |

|---|---|---|---|---|---|

| Unit Testing (Dev machine & Build Machine) | Feature Branch | Does the public function work for ALL positive and negative scenarios? | Public Methods/Functions | Everything external to the class | Arrange – Mock others for asserting right. – Initiate class and inject mocks. Act – On Public functions. Assert – For Every detail. – On Return values. – On calls going outside the class. – For datetime test in between days, weeks, months and years. |

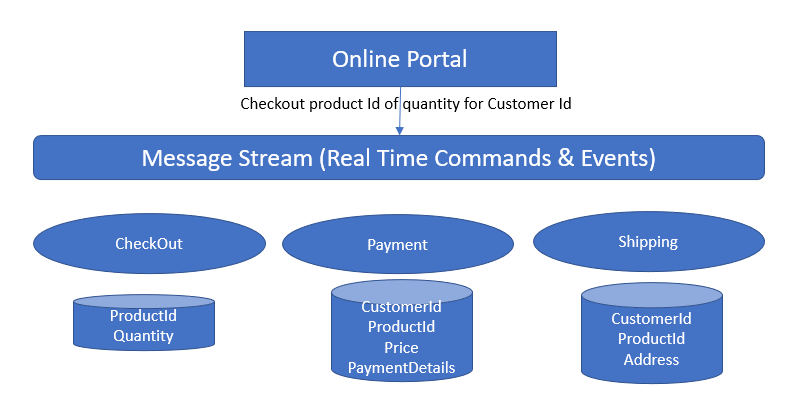

| Functional testing (Dev machine & Build Machine) | Feature Branch | Are all the scenarios/Business case met? How does this component behave even when any external dependencies are down? | Component (Service, Web API, DLL, JAR etc.) | Everything external to the Component (Infrastructure like DB and Message Bus, other services etc.) | Given – Mock infrastructure/ other service calls. – Initiate the component and inject / Override with mock. When – Trigger the scenario ( Call Web API/Invoke event in case of EDA). Then – Assert if relevant calls are made to repositories for read-write / calls to message client for publishing new event (EDA). |

| Integration Testing (Post Deploying to Dev environment) | Feature Branch | Is the component working when real instances of Infrastructure are provided? | Component (Service, Web API, DLL, JAR etc.) Infrastructure – Database, message bus, Auth | Nothing | Given – Set Prerequisites before the call When – Trigger the component scenarios Then – Assert sync/async responses |

| System Testing (After any new deployments in a bounded context) | Master/ Trunk | Is the End to End Business case working when all services are up and running | Bounded Context (Multiple services, containers and dependent infrastructures) | Nothing | Given – Set Prerequisites before the call – Onboard customers if its part of business process When – Trigger the end users scenarios Then – Assert end user expectations – Deboard if onboarded |

Happy Learning

Codingsense